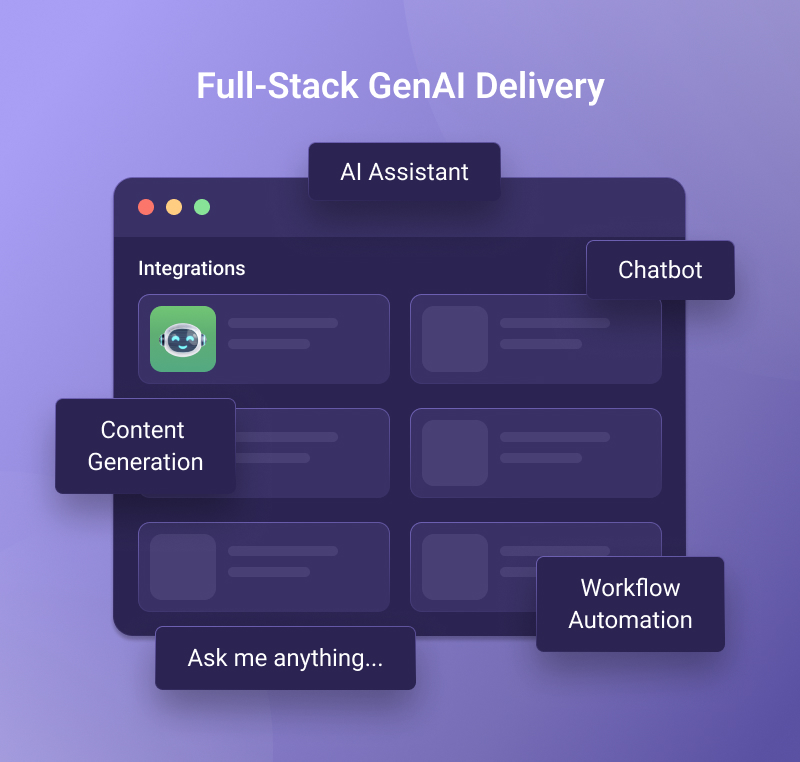

OpenAI API Integration & Full-Stack GenAI Delivery

Good fit if you want to ship GenAI features that matter to customers or teams, and don’t want to build the infrastructure alone.

If needed, we support user-supplied OpenAI API keys, secure token capture, and usage metering inside your product.

Belitsoft designs, builds, and deploys OpenAI-powered features into your real product or internal platform: an AI assistant inside your app, a domain-specific chatbot for customer service or HR, an auto-generated content engine (emails, reports, briefs), a smart form or workflow agent (fills, validates, suggests), and user-connected AI workflows (where your users bring their own OpenAI keys to unlock features inside your SaaS).
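For the user-supplied keys and usage metering mentioned above, a minimal Python sketch could look like this. It assumes each API response carries a `usage` object with `prompt_tokens` and `completion_tokens`, as Chat Completions responses do. `UsageMeter`, `mask_key`, and the per-1K-token prices are hypothetical names and parameters, not an OpenAI or Belitsoft API.

```python
from collections import Counter, defaultdict


def mask_key(api_key: str) -> str:
    """Hypothetical helper: hide a user-supplied key except its last 4 characters."""
    if len(api_key) <= 4:
        return "*" * len(api_key)
    return "*" * (len(api_key) - 4) + api_key[-4:]


class UsageMeter:
    """Hypothetical per-user token meter fed from each response's `usage` object."""

    def __init__(self) -> None:
        self._totals = defaultdict(Counter)

    def record(self, user_id: str, usage: dict) -> None:
        # Accumulate the token counts the API reported for this user's request.
        self._totals[user_id]["prompt_tokens"] += usage.get("prompt_tokens", 0)
        self._totals[user_id]["completion_tokens"] += usage.get("completion_tokens", 0)

    def total_tokens(self, user_id: str) -> int:
        return sum(self._totals[user_id].values())

    def estimate_cost(self, user_id: str, prompt_price_per_1k: float,
                      completion_price_per_1k: float) -> float:
        # Prices are caller-supplied assumptions; check the provider's current pricing.
        t = self._totals[user_id]
        return (t["prompt_tokens"] / 1000 * prompt_price_per_1k
                + t["completion_tokens"] / 1000 * completion_price_per_1k)
```

The keys themselves belong in a secrets store; the meter only ever sees counts, and logs only ever see the masked form.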

We define the job to be done, build the GenAI logic on the best-fit OpenAI model (GPT-4 Turbo, Whisper, DALL·E, etc.), tune it for your domain or data (prompt stack, RAG, fine-tuning, Assistants API), deploy it inside your UX (web, mobile, chat, or API), and wrap it as a production service (with API key management, rate limit handling, error fallback, token tracking, logging, and usage transparency). After launch, we monitor, improve, and support it.
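Rate limit handling usually means retrying HTTP 429 responses with exponential backoff. The sketch below assumes a hypothetical `RateLimited` exception raised by whatever client wrapper you use (the official OpenAI Python SDK has its own error classes and built-in retries, which you may prefer). The `sleep` parameter is injectable so the logic can be tested without waiting.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class RateLimited(Exception):
    """Hypothetical stand-in for a provider's HTTP 429 error."""


def call_with_backoff(call: Callable[[], T], *, max_attempts: int = 4,
                      base_delay: float = 0.5,
                      sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry `call` on RateLimited, waiting up to base_delay * 2**attempt seconds (full jitter)."""
    for attempt in range(max_attempts):
        try:
            return call()
        except RateLimited:
            if attempt == max_attempts - 1:
                raise  # out of attempts: hand the error to the fallback or escalation path
            sleep(random.uniform(0, base_delay * 2 ** attempt))
    raise ValueError("max_attempts must be at least 1")
```

Jitter spreads retries from many concurrent users so they do not hit the rate limit again in lockstep.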

You’ll get a real product that works on your data, in your domain, under your constraints, and uses the latest OpenAI APIs (function calling, persistent threads, file search, multimodal inputs).
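Function calling works by describing tools to the model and then executing the calls it returns. A minimal sketch, assuming the Chat Completions `tools` format and tool-call dictionaries with an `id`, a `function.name`, and a JSON-string `function.arguments`; `get_order_status` is a hypothetical stub standing in for your own system.

```python
import json

# Tool description in the Chat Completions `tools` format (the tool itself is hypothetical).
TOOLS = [{
    "type": "function",
    "function": {
        "name": "get_order_status",
        "description": "Look up the current status of a customer order.",
        "parameters": {
            "type": "object",
            "properties": {"order_id": {"type": "string"}},
            "required": ["order_id"],
        },
    },
}]


def get_order_status(order_id: str) -> dict:
    # Stub: a real integration would query your order system here.
    return {"order_id": order_id, "status": "shipped"}


REGISTRY = {"get_order_status": get_order_status}


def run_tool_calls(tool_calls: list[dict]) -> list[dict]:
    """Execute the model's tool calls and build the `tool` messages to send back."""
    messages = []
    for call in tool_calls:
        fn = REGISTRY.get(call["function"]["name"])
        if fn is None:
            output = {"error": f"unknown tool {call['function']['name']}"}
        else:
            # Arguments arrive as a model-generated JSON string; production code
            # should validate them against the schema before executing anything.
            output = fn(**json.loads(call["function"]["arguments"]))
        messages.append({"role": "tool", "tool_call_id": call["id"],
                         "content": json.dumps(output)})
    return messages
```

The returned messages are appended to the conversation so the model can phrase its final answer from real data.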

OpenAI Integration Consulting Services

Not sure where GenAI fits? We run short, focused discovery cycles to help you define what GenAI can actually do inside your workflows, which jobs are worth automating, and which model setup fits your constraints (data, users, stack).

If you're a multi-department company with diverse tools, systems, or user types, we’ll architect how each assistant speaks, thinks, and routes queries, how data is accessed securely, and which model configurations (chat, RAG, function calling) support which use case. You’ll know what to build, why it matters, what it connects to, and where the risks are.

OpenAI Integration Support

Every GenAI system needs maintenance. We support yours like any other software: we monitor token usage, model performance, and failure points; log prompt/response pairs for drift detection and tuning; add retry, fallback, and escalation paths; tune prompt stacks as your data and users change; and patch hallucination risks and quality regressions.
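Logging prompt/response pairs is what makes drift detection and tuning possible later. One possible shape, sketched under the assumption that records are written as JSON Lines to any sink you pass in (a file, a log shipper, a queue); the field names, including `prompt_version`, are hypothetical.

```python
import hashlib
import json
from datetime import datetime, timezone
from typing import Callable


def log_exchange(write: Callable[[str], object], *, model: str, prompt_version: str,
                 prompt: str, response: str, latency_ms: float) -> dict:
    """Write one prompt/response pair as a JSON line and return the record."""
    record = {
        "ts": datetime.now(timezone.utc).isoformat(),
        "model": model,
        # Tag records with the prompt-stack version so regressions trace back to a change.
        "prompt_version": prompt_version,
        # A stable hash groups identical prompts without comparing full texts.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt": prompt,
        "response": response,
        "response_chars": len(response),
        "latency_ms": latency_ms,
    }
    write(json.dumps(record, ensure_ascii=False) + "\n")
    return record
```

Comparing fields such as `response_chars` or latency across prompt versions and time windows is a cheap first drift signal. In regulated setups, mask PII before the record is written.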

You get structured change control, visibility into what the model is doing, and controlled evolution rather than surprise failures. Every deployment includes domain logic, error handling, real-time feedback hooks, and the ability to scale into production.

GenAI Prototyping

Good fit if you want to see if GenAI actually helps your team, without wasting a quarter on planning.

Typical use cases: a chat agent that answers from internal docs or a help center, a GPT-powered analytics assistant for ops or sales, a copy or email assistant tuned for your industry, or a translation and localization layer for support or content.

Belitsoft scopes the smallest possible test that proves value, builds a usable prototype in days or a few weeks, runs it with real inputs to measure output quality, wraps it with a light UI or API layer, and from there decides whether to keep it, scale it, or drop it.

We use current OpenAI capabilities: Assistants API, file search, retrieval, tools. No vague PoCs. No overbuild. One job, one tool, one result until the prototype proves ROI.

Just want to connect a few tools and automate a simple task with GenAI? We do that too. Examples: trigger (new form submission, Slack message, etc.) → send to GPT → answer pulled from docs → auto-generate response (email to lead, product description synced to CMS, etc.). Built in days.
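The trigger → GPT → docs → response flow mostly comes down to assembling the right request. A minimal sketch, assuming a Chat Completions-style payload; the event fields (`source`, `sender`, `text`), the system prompt, and the default model name are illustrative assumptions, and sending the request is left to your automation tool or HTTP client.

```python
def build_reply_request(event: dict, doc_snippets: list[str], model: str = "gpt-4o") -> dict:
    """Turn a trigger event plus retrieved doc snippets into a Chat Completions request body."""
    context = "\n\n".join(doc_snippets)
    system_prompt = (
        "You draft replies to inbound messages. Answer only from the documentation "
        "below; if it does not cover the question, say so.\n\nDocumentation:\n" + context
    )
    user_prompt = f"Source: {event['source']}\nFrom: {event['sender']}\nMessage: {event['text']}"
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_prompt},
        ],
        "temperature": 0.2,  # low temperature keeps drafts close to the source text
    }
```

Keeping request building separate from sending makes the flow easy to test and to reuse across Slack, form, or email triggers.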

You’ll get a working GenAI prototype fast. We define one core use case, build it, and show results using your real data.

Tried Fiverr? Need it done right? If your OpenAI integration was built by a freelancer and is now buggy, slow, or missing features, we’ll fix it. We clean up half-built GPT bots, stabilize API calls, and rewire spaghetti logic so it scales.

OpenAI-Augmented Process Automation

Good fit if you already use automation tools and want to inject GenAI, or if you’re testing AI in operations with no dev team at all.

Belitsoft plugs OpenAI into your existing automation stack (such as UiPath, n8n, Make, ApiX-Drive, or Zapier) or builds custom tools for your workflows.

Typical use cases: auto-drafting replies or summaries inside business tools; AI-powered triage for support tickets or form data; document cleanup, classification, or routing; natural language interfaces that trigger actions; and lightweight AI flows across tools you already use.

We map your workflows and tasks, identify where GenAI can save time or reduce steps, add OpenAI into your automation flow (via plugin, script, or low-code integration), wrap results with controls (error handling, rollback, edits), and ship and track the flow in production. Then we iterate.

You’ll get real automation without hallucination surprises. We add logic, filters, and checks built on OpenAI APIs such as chat completions, embeddings, and moderation, compatible with enterprise RPA tools, iPaaS platforms, or entry-level builders.
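The logic, filters, and checks mentioned above can be as simple as refusing to act on model output that does not validate. A sketch, assuming the model was asked to return JSON with `label` and `confidence` fields for ticket triage; the label set and the 0.7 threshold are hypothetical.

```python
import json

ALLOWED_LABELS = {"billing", "technical", "sales", "other"}  # hypothetical ticket categories


def triage_decision(raw_model_output: str, min_confidence: float = 0.7) -> dict:
    """Route a ticket only if the model's JSON parses, uses a known label, and is confident enough."""
    try:
        parsed = json.loads(raw_model_output)
        label = parsed["label"]
        confidence = float(parsed["confidence"])
    except (ValueError, KeyError, TypeError):  # JSONDecodeError is a ValueError subclass
        return {"action": "human_review", "reason": "unparseable output"}
    if not isinstance(label, str) or label not in ALLOWED_LABELS:
        return {"action": "human_review", "reason": f"unknown label {label!r}"}
    if not confidence >= min_confidence:  # written this way so NaN is also rejected
        return {"action": "human_review", "reason": "low confidence"}
    return {"action": "route", "label": label}
```

A model's self-reported confidence is not calibrated, so the threshold is best tuned against a labeled sample of your own tickets.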

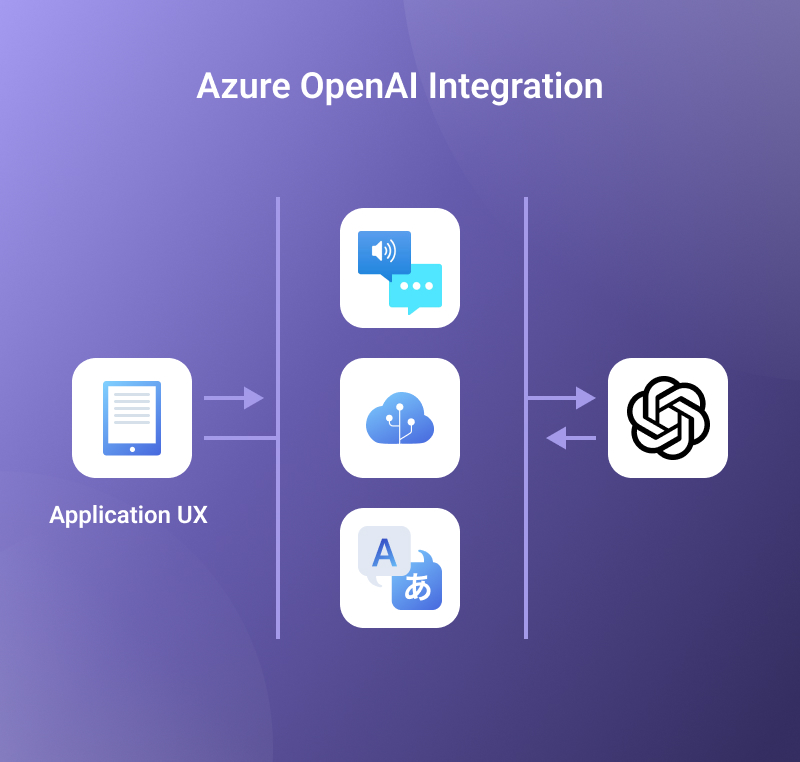

Enterprise GenAI: Azure OpenAI Integration

For enterprise teams already using Microsoft Azure, organizations with compliance, audit, or data residency requirements, and companies that cannot send data to OpenAI SaaS but still want GPT-class capabilities.

Belitsoft designs, deploys, and manages OpenAI-powered features inside your existing Azure ecosystem. This includes secure, region-compliant deployment using Azure OpenAI.

Typical use cases: multi-modal internal AI document agents (PDFs, search, forms) integrated with on-prem or hybrid data storage, hosted entirely inside your tenant; secure summarization or triage of PHI/PII in regulated workflows; GPT integration into Microsoft platforms (SharePoint, Power BI, Teams, Fabric); AI-powered data access layers on top of Azure AI Search or enterprise data lakes.

We scope the job to be done (document QA, triage, insight extraction, etc.), provision Azure OpenAI resources inside your environment, build the GenAI logic using GPT-4, embeddings, or the Assistants API, plug it into your stack (via Azure AI Search, Function Apps, or your web frontends), and add enterprise logic (private endpoints, RBAC and role isolation, logging and moderation layers, rate-limited queues, and audit logs). Then we deploy, monitor, and iterate.
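One practical difference from OpenAI’s public API is how requests are addressed: Azure OpenAI routes by your resource name and deployment name and can authenticate with an `api-key` header. A minimal sketch using only the Python standard library; the resource, deployment, and `api_version` default are placeholders, so check the Azure OpenAI REST reference for the versions available to you.

```python
import json
import urllib.request


def build_azure_chat_request(resource: str, deployment: str, api_key: str,
                             messages: list[dict],
                             api_version: str = "2024-06-01") -> urllib.request.Request:
    """Build (but do not send) a chat completions request for an Azure OpenAI deployment."""
    url = (f"https://{resource}.openai.azure.com/openai/deployments/"
           f"{deployment}/chat/completions?api-version={api_version}")
    # No "model" field: the deployment name in the URL selects the model.
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )
```

Passing the result to `urllib.request.urlopen` sends it. Many enterprise setups use Microsoft Entra ID tokens instead of static keys, which fits the RBAC model above.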

Enterprise-grade GenAI, like Azure OpenAI, runs inside a secure tenant. Prompts and completions are not used to train the models. Data is not shared with OpenAI or other customers. Models are deployed into the company’s cloud perimeter: that difference isn’t merely technical; it’s existential.

OpenAI on AWS

Good fit if you want OpenAI/GPT-4 performance but need sensitive data to stay inside AWS, sanitized before any request leaves your cloud.

You’ll keep your data safe, your team compliant, and your access to world-class models open.

If needed, we support AWS-native redaction pipelines, tokenized PII masking, OpenAI API call control, and full audit logging.

Belitsoft designs and deploys a compliant integration path between your AWS-based systems and OpenAI’s API, so you can use, for example, GPT-4 for document analysis, chat agents, summarization, research, reporting, or smart workflows without exposing raw prompts outside your security perimeter.

We insert a PII-scrubber layer using Bedrock-hosted models (Claude, Mistral, Titan) or lightweight redaction logic (regex, heuristics, entity detection), sanitize prompts inside your environment, and forward only clean requests to OpenAI. The response is logged, optionally de-tokenized (placeholders swapped back for the original values), and returned to your app.
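The lightweight redaction path can be sketched as reversible masking: replace detected values with placeholders before the prompt leaves your environment, keep the lookup table inside AWS, and swap the values back into the response. The regex patterns here are deliberately simple assumptions; they will miss names, addresses, and many formats (and can over-match things like dates), which is why entity detection or a Bedrock-hosted model usually sits alongside them.

```python
import re

# Deliberately simple, illustrative patterns; real pipelines add entity detection.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}


def mask_pii(text: str) -> tuple[str, dict[str, str]]:
    """Replace each distinct match with a placeholder like <EMAIL_1>; return text and vault."""
    vault: dict[str, str] = {}
    for label, pattern in PATTERNS.items():
        # dict.fromkeys de-duplicates matches while keeping first-seen order.
        for i, value in enumerate(dict.fromkeys(pattern.findall(text)), start=1):
            token = f"<{label}_{i}>"
            vault[token] = value
            text = text.replace(value, token)
    return text, vault


def unmask(text: str, vault: dict[str, str]) -> str:
    """Swap placeholders in the model's response back to the original values."""
    for token, value in vault.items():
        text = text.replace(token, value)
    return text
```

Only the masked text is sent to OpenAI; the vault never leaves your account. If the model rewrites a placeholder, `unmask` simply leaves it in place, which is easy to detect in the audit log.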

You’ll get an auditable, serverless solution (no infrastructure for you to manage) deployed in your own AWS account, with IAM control, pay-per-use cost, token usage visibility, and optional fallback to Claude models if OpenAI is unreachable or restricted.
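The optional Claude fallback boils down to trying providers in order and recording why each one failed. A minimal sketch; `ProviderUnavailable` and the provider callables are hypothetical adapters you would write around the OpenAI API and Amazon Bedrock clients.

```python
from typing import Callable


class ProviderUnavailable(Exception):
    """Hypothetical error an adapter raises when its provider is unreachable or restricted."""


def complete_with_failover(prompt: str,
                           providers: list[tuple[str, Callable[[str], str]]]) -> tuple[str, str]:
    """Try each (name, call) pair in order; return (provider_name, completion) from the first success."""
    errors: list[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderUnavailable as exc:
            errors.append(f"{name}: {exc}")  # keep the reason for the audit log
    raise ProviderUnavailable("; ".join(errors) or "no providers configured")
```

Returning the provider name alongside the text lets you log and label which model produced each answer, since the fallback model will phrase things differently.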

Integrate the OpenAI (ChatGPT) API with Google Cloud

For engineering teams building production-grade GenAI features inside Google Cloud, organizations that need OpenAI integrated across apps, workflows, and data pipelines, and teams that require secure, observable AI applications.

Belitsoft designs, containerizes, and deploys GenAI-powered applications in your GCP environment, integrating OpenAI with your infrastructure, tools, and compliance requirements.

This includes app development (Flask, FastAPI, etc.), containerization and CI/CD (Docker, Cloud Build), serverless deployment (Cloud Run), secret handling (GCP Secret Manager), and monitoring and scaling (Cloud Logging, Cloud Run autoscaling).

Use cases include a GPT-4 document summarizer deployed as a Google Chat assistant, an API-triggered RAG pipeline with a vector DB (Pinecone, Weaviate, FAISS, Qdrant, etc.), a GPT-based content generator or assistant embedded in internal dashboards, and workspace-integrated agents that answer queries, triage data, or explain reports.
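The retrieval step of an API-triggered RAG pipeline ranks stored chunks by vector similarity to the query. The sketch below uses an in-memory list and toy 2-dimensional vectors as a stand-in for Pinecone, Weaviate, FAISS, or Qdrant; in a real pipeline the vectors would come from an embeddings model, and the ids and texts here are hypothetical.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: list[float], index: list[tuple[str, list[float], str]], k: int = 2):
    """Rank (doc_id, vector, text) entries by similarity to the query; keep the best k."""
    return sorted(index, key=lambda entry: cosine(query, entry[1]), reverse=True)[:k]


def build_context(query: list[float], index: list[tuple[str, list[float], str]],
                  k: int = 2) -> str:
    """Join the retrieved chunks into the context block sent along with the prompt."""
    return "\n\n".join(text for _, _, text in top_k(query, index, k))
```

Brute-force scoring is fine for a prototype; a vector DB replaces `top_k` with an indexed nearest-neighbor search once the corpus grows.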

We build the AI around your architecture — not the other way around.

OpenAI Integration Use Cases

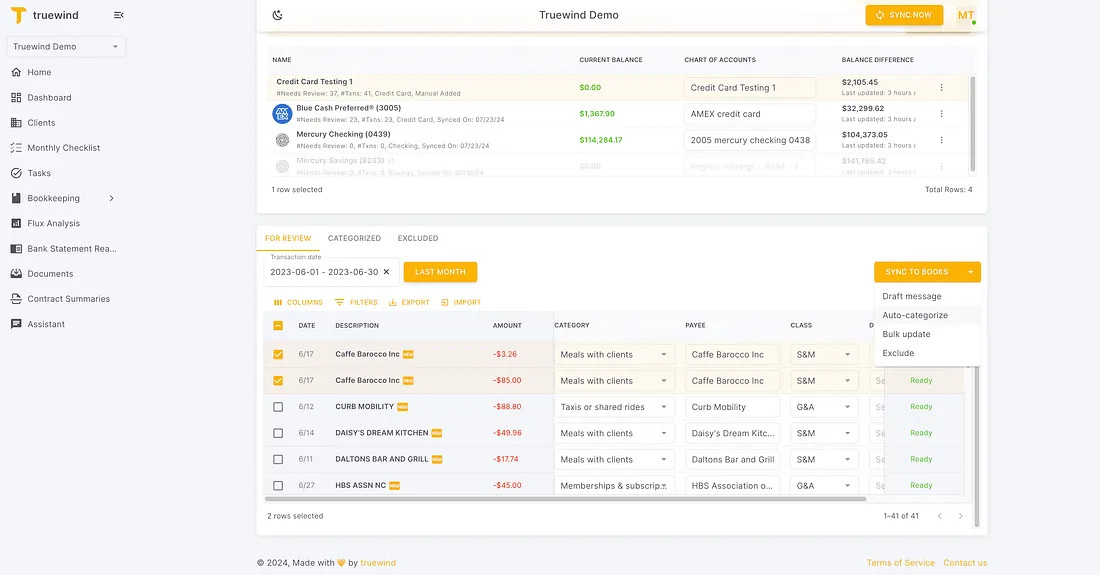

OpenAI Fintech & Accounting

Most accountants aren’t asking whether AI matters; they’re asking where it fits. The early majority is now rolling out secure models over proprietary data. Use cases range from invoice processing and contract extraction to margin forecasting and audit triage. AI in accounting won’t be about who has the best chatbot, but about who has the fastest insight-to-decision loop, the leanest planning cycle, and the tightest risk-signal detection.

Startups in the finance and accounting industry are emerging. They not only attract investment ($17 million) but also have real clients. One of them is Truewind, founded about two years ago (at the time of writing). A core component of its offer for companies working in accounting is document classification: assigning categories, displaying a confidence score for each classification, and explaining why a particular classification was applied.

Integrating such a solution into a B2B SaaS product enables a sustainable business model and a gradual scaling and deepening of automation in accounting processes. Truewind relies on the OpenAI API and vector databases.

Frequently Asked Questions

OpenAI’s public API is hosted by OpenAI (not Microsoft) and runs on OpenAI’s own infrastructure.

Microsoft offers a separately hosted, enterprise-grade version of OpenAI models (Azure OpenAI), deployed inside Azure datacenters, provisioned through Azure Resource Manager, and accessed through Azure-specific endpoints and keys.

OpenAI licenses the models to Microsoft. Microsoft gets access to the model weights and serving stack via a commercial agreement.

Microsoft doesn’t rewrite the models; it runs OpenAI’s models as-is, but inside Microsoft Azure.

Why does Azure OpenAI exist?

Because large enterprise and government clients often say: "We can’t send data to OpenAI’s API over the public internet. We need regional hosting, VNet isolation, audit trails, SOC 2 compliance, HIPAA, etc."

So Microsoft says: "We’ll run OpenAI’s models inside our own cloud. That way, your data never leaves Azure, and you stay compliant."

At the time of writing, AWS has no partnership with OpenAI. You will not find OpenAI’s GPT models in Bedrock, SageMaker, or any AWS-native endpoint; OpenAI’s infrastructure runs outside AWS. The AWS Bedrock documentation lists only Anthropic, Mistral, Meta, Amazon Titan, Cohere, and Stability models, and the OpenAI docs list only OpenAI-hosted and Azure-hosted access. AWS partners with multiple model providers, but not with OpenAI.

Bedrock includes Anthropic Claude (Claude 3 Opus, Sonnet, Haiku), Mistral (Mistral 7B, Mixtral), Amazon Titan (text, embeddings), Meta Llama, Cohere Command R, and Stability AI Stable Diffusion. These are all hosted and managed by AWS, billed via your AWS account, secured with IAM, and run in AWS regions.

The only way to get GPT-4 while working from AWS is to call an endpoint outside your VPC: typically OpenAI’s public endpoint "https://api.openai.com/v1/chat/completions" (or an Azure OpenAI endpoint).

Your Lambda, ECS task, or EC2 instance calls out to the internet. The data leaves AWS and reaches OpenAI’s infrastructure, and OpenAI returns the result over HTTPS. There is no IAM, no region control, and no private endpoint: it’s like calling Stripe, Twilio, or any other SaaS API.
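To make this concrete, here is roughly what such a call looks like from a Lambda function or container, using only the Python standard library. It is a sketch: the model name is an assumption, and the key is read from an `OPENAI_API_KEY` environment variable (in practice it would come from a secrets store). Note that authentication is a bearer token, not IAM.

```python
import json
import os
import urllib.request
from typing import Optional

OPENAI_URL = "https://api.openai.com/v1/chat/completions"


def build_openai_request(prompt: str, model: str = "gpt-4-turbo",
                         api_key: Optional[str] = None) -> urllib.request.Request:
    """Build the HTTPS request; nothing is sent until `send` is called."""
    key = api_key or os.environ["OPENAI_API_KEY"]
    body = json.dumps({"model": model,
                       "messages": [{"role": "user", "content": prompt}]}).encode("utf-8")
    return urllib.request.Request(
        OPENAI_URL,
        data=body,
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )


def send(req: urllib.request.Request, timeout: float = 30) -> dict:
    # This is where the data leaves AWS: an outbound HTTPS call to OpenAI's public endpoint.
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())
```

If the function runs inside a VPC, this outbound call needs a NAT gateway or similar egress path, which is also the natural place to apply egress controls and the redaction layer described earlier.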

Many people assume AWS must offer GPT too, but it doesn’t, by design.

Our Clients' Feedback

We have been working together for over 10 years, and they have become our long-term technology partner. Whatever software development, programming, or design needs we have had, Belitsoft has always been able to handle them for us.

Founder, ZensAI (Microsoft), formerly Elearningforce